Thanks for your hints, @vbr.

Unfortunately, this does not work in my case.

I tried to deply the nfs-provisiones with the following command.

helm upgrade --install \

--namespace kube-system \

--set persistence.enabled=true \

--set persistence.size=1Gi \

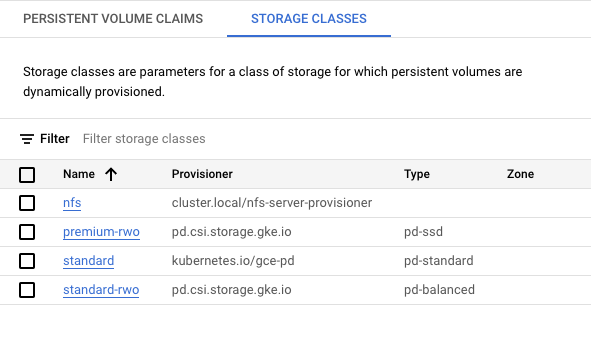

--set storageClass.name=nfs \

--set storageClass.allowVolumeExpansion=true \

--version 1.1.3 \

nfs-server \

stable/nfs-server-provisioner

and got this response:

Release "nfs-server" does not exist. Installing it now.

WARNING: This chart is deprecated

Error: create: failed to create: secrets is forbidden: User "xxx" cannot create resource "secrets" in API group "" in the namespace "kube-system": GKE Warden authz [denied by managed-namespaces-limitation]: the namespace "kube-system" is managed and the request's verb "create" is denied

So I changed the namespace to default and got the following response:

Release "nfs-server" does not exist. Installing it now.

WARNING: This chart is deprecated

W0731 21:05:13.689870 33041 warnings.go:70] autopilot-default-resources-mutator:Autopilot updated StatefulSet default/nfs-server-nfs-server-provisioner: defaulted unspecified resources for containers [nfs-server-provisioner] (see http://g.co/gke/autopilot-defaults)

Error: admission webhook "warden-validating.common-webhooks.networking.gke.io" denied the request: GKE Warden rejected the request because it violates one or more constraints.

Violations details: {"[denied by autogke-default-linux-capabilities]":["linux capability 'DAC_READ_SEARCH,SYS_RESOURCE' on container 'nfs-server-provisioner' not allowed; Autopilot only allows the capabilities: 'AUDIT_WRITE,CHOWN,DAC_OVERRIDE,FOWNER,FSETID,KILL,MKNOD,NET_BIND_SERVICE,NET_RAW,SETFCAP,SETGID,SETPCAP,SETUID,SYS_CHROOT,SYS_PTRACE'."]}

Nevertheless, the storage class exists now, but it does not work: the PVC is stuck in Pending.

So I guess additional configuration is necessary to get this working.

Do you use GKE in Autopilot or Standard mode?
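To make the second error easier to read, here is a minimal sketch that compares the capabilities requested by the chart against the allowlist quoted in the Warden message above. The function name `check_caps` is a hypothetical helper, not part of helm or kubectl; the allowlist is copied verbatim from the error and may differ on other Autopilot versions.

```shell
#!/usr/bin/env bash
# Allowlist copied from the GKE Warden error message above (assumption:
# it is complete for this cluster; other Autopilot versions may differ).
allowed="AUDIT_WRITE,CHOWN,DAC_OVERRIDE,FOWNER,FSETID,KILL,MKNOD,NET_BIND_SERVICE,NET_RAW,SETFCAP,SETGID,SETPCAP,SETUID,SYS_CHROOT,SYS_PTRACE"

# check_caps (hypothetical helper): prints every capability from the
# comma-separated argument that is NOT in the allowlist, one per line.
check_caps() {
  local requested="$1" cap
  local IFS=','
  for cap in $requested; do
    case ",$allowed," in
      *",$cap,"*) ;;          # allowed, print nothing
      *) echo "$cap" ;;       # not allowed in Autopilot
    esac
  done
}

# Capabilities named in the violation for container nfs-server-provisioner.
check_caps "DAC_READ_SEARCH,SYS_RESOURCE"
```

Both capabilities the container asks for are missing from the allowlist, which matches the rejection of the StatefulSet.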

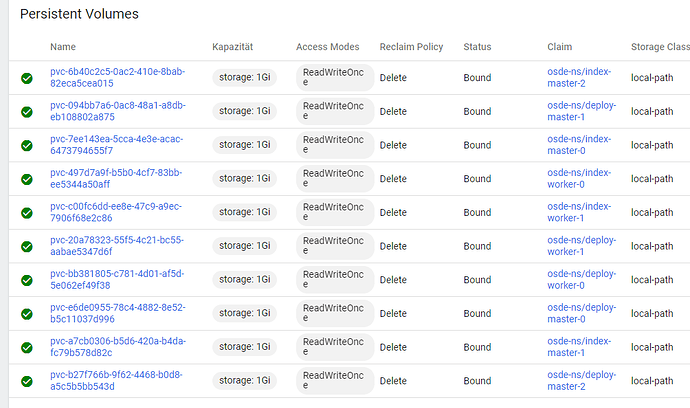

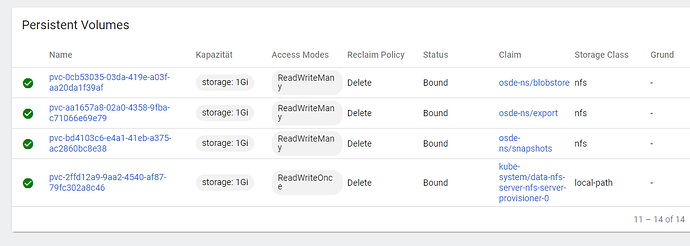

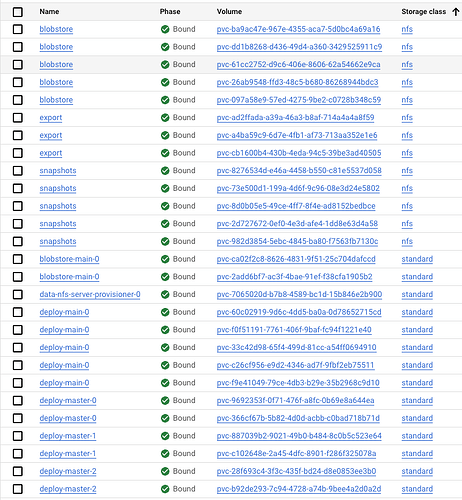

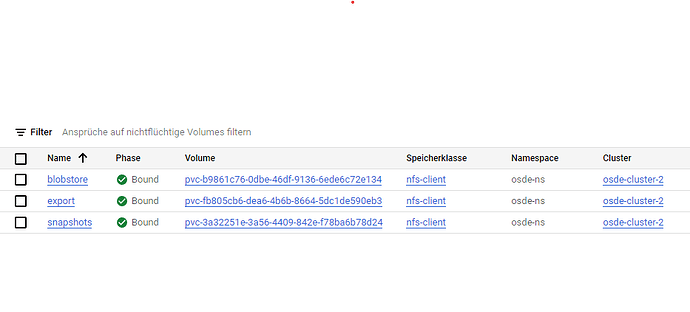

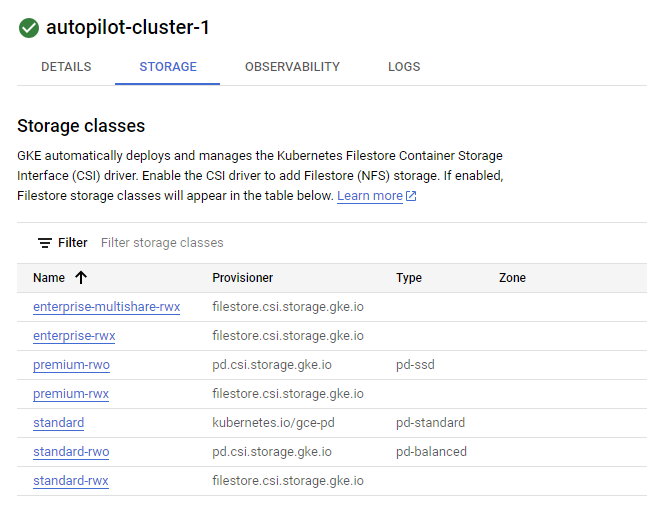

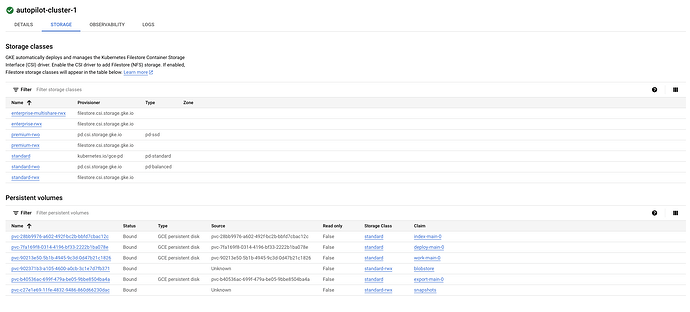

Update: I managed to get the shared storage working by creating an (NFS) Filestore instance in Google Cloud and deploying an NFS-Client-Provisioner, but I do not see the PVCs for the standard storage!

Update: I get the following error when deploying the xp7deployment:

2023-08-01 11:34:14,504 ERROR io.fab.kub.cli.inf.imp.cac.SharedProcessor - enonic.cloud/v1/xp7deployments failed invoking com.enonic.kubernetes.operator.xp7deployment.OperatorXp7DeploymentHelm@fb40d01 event handler: Failure executing: POST at: https://10.68.0.1:443/apis/apps/v1/namespaces/osde-ns/statefulsets. Message: admission webhook "warden-validating.common-webhooks.networking.gke.io" denied the request: GKE Warden rejected the request because it violates one or more constraints.

Violations details: {"[denied by autogke-disallow-privilege]":["container configure-sysctl is privileged; not allowed in Autopilot"]}

Requested by user: 'system:serviceaccount:default:xp-operator', groups: 'system:serviceaccounts,system:serviceaccounts:default,system:authenticated'.. Received status: Status(apiVersion=v1, code=400, details=null, kind=Status, message=admission webhook "warden-validating.common-webhooks.networking.gke.io" denied the request: GKE Warden rejected the request because it violates one or more constraints.

So @vbr, how did you get this running?